CASSIE: Curve And Surface Sketching in Immersive Environments

Abstract

We present CASSIE, a conceptual modeling system in VR that leverages freehand mid-air sketching and a novel 3D optimization framework to create connected curve network armatures, predictively surfaced using patches with $C^0$ continuity. Our system strikes a judicious balance of interactivity and automation, providing a homogeneous 3D drawing interface for a mix of freehand curves, curve networks, and surface patches. It encourages and aids users in drawing consistent networks of curves, easing the transition from freehand ideation to concept modeling. A comprehensive user study with professional designers as well as amateurs (N=12), and a diverse gallery of 3D models, show our armature and patch functionality to offer a user experience and expressivity on par with freehand ideation, while creating sophisticated concept models for downstream applications.

Video preview

Short summary

3D sketches contain lots of 3D information, yet their lack of structure (a soup of stroke primitives) makes them difficult to re-use in downstream processes, such as the creation of a 3D model.

Humans, especially when not experienced with VR sketching, are inherently not very good at sketching precisely in 3D. This is one of the root causes of the “lack of structure” problem: it is pretty hard to sketch strokes that actually intersect in 3D.

Attempt at drawing a square freehand in VR

So we propose to augment VR sketching with automatic neatening and structuring of strokes. Each stroke that the user creates is minimally reshaped to intersect with nearby strokes.
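To give a feel for what "minimally reshaped to intersect" can mean, here is a rough Python sketch. It is not the optimization used in our system: it only snaps the last point of a polyline stroke onto the closest nearby stroke and spreads the correction linearly along the stroke. The function names and the `radius` threshold (in meters) are assumptions made up for this illustration.

```python
import math

def closest_point_on_segment(p, a, b):
    """Return the point of segment [a, b] closest to p (3D points as lists)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    denom = sum(c * c for c in ab)
    t = 0.0 if denom == 0.0 else sum(ap[i] * ab[i] for i in range(3)) / denom
    t = max(0.0, min(1.0, t))  # clamp so the result stays on the segment
    return [a[i] + t * ab[i] for i in range(3)]

def closest_point_on_polyline(p, polyline):
    """Return (closest point, distance) from p to a polyline with >= 2 points."""
    best, best_d = None, math.inf
    for a, b in zip(polyline, polyline[1:]):
        q = closest_point_on_segment(p, a, b)
        d = math.dist(p, q)
        if d < best_d:
            best, best_d = q, d
    return best, best_d

def snap_stroke_end(stroke, others, radius=0.05):
    """Hypothetical helper: pull the end of `stroke` onto the nearest stroke
    in `others` if one lies within `radius`, otherwise return a copy."""
    end = stroke[-1]
    target, dist = None, radius
    for other in others:
        q, d = closest_point_on_polyline(end, other)
        if d <= dist:
            target, dist = q, d
    if target is None:
        return [list(p) for p in stroke]
    offset = [target[i] - end[i] for i in range(3)]
    n = max(len(stroke) - 1, 1)
    # Weight grows from 0 at the first point to 1 at the end point, so the
    # start of the stroke stays where the user drew it.
    return [[p[i] + (k / n) * offset[i] for i in range(3)]
            for k, p in enumerate(stroke)]

stroke = [[0.0, 0.0, 0.0], [0.5, 0.0, 0.0], [0.98, 0.0, 0.0]]
other = [[1.0, -1.0, 0.0], [1.0, 1.0, 0.0]]
snapped = snap_stroke_end(stroke, [other])
```

In this toy example the stroke stops 2 cm short of the other stroke, so its end point is moved onto it while the first point stays fixed. A real system also has to handle intersections away from the stroke ends and keep the reshaped stroke smooth.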

Structuring the sketch on-the-fly enables us to build a curve network representation of the sketch, which we can use to infer surface patches.
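As an illustration of why the curve network helps, the sketch below treats intersections as graph nodes and curve segments as edges, then lists short closed cycles as candidate patch boundaries. This is a simplified stand-in, not the cycle detection used in our system; `find_cycles`, the integer node labels, and the `max_len` cap are assumptions for the example.

```python
def find_cycles(edges, max_len=4):
    """Enumerate simple cycles with at most `max_len` nodes in an undirected
    graph given as (node, node) pairs. Nodes must be comparable (e.g. ints)."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    cycles = []

    def extend(path):
        start, last = path[0], path[-1]
        for nxt in sorted(adj[last]):
            if nxt == start and len(path) >= 3 and path[1] < path[-1]:
                # Closed a cycle. The path[1] < path[-1] check keeps only one
                # of the two traversal directions.
                cycles.append(tuple(path))
            elif nxt > start and nxt not in path and len(path) < max_len:
                # Only visit nodes larger than the start node, so each cycle
                # is reported once, starting from its smallest node.
                extend(path + [nxt])

    for node in sorted(adj):
        extend([node])
    return cycles

# A square of four curve segments, split by one diagonal curve.
network = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
candidates = find_cycles(network)
```

Here the candidates are the two triangles on either side of the diagonal and the outer square. Deciding which candidate cycles should actually be surfaced is the hard part, and this sketch does not address it.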

This helps with occlusion, making the sketches easier to visually parse. It also enables using sketching as a medium for 3D modelling: the result is a 3D surface!

Sketches done with our system by 2 of the authors and 1 participant

So the 3D surface can be used for fabrication, or further refinement via sculpting. NB: we don’t guarantee 3D-printability of the resulting mesh, but simple remeshing in, e.g., MeshLab does the trick.

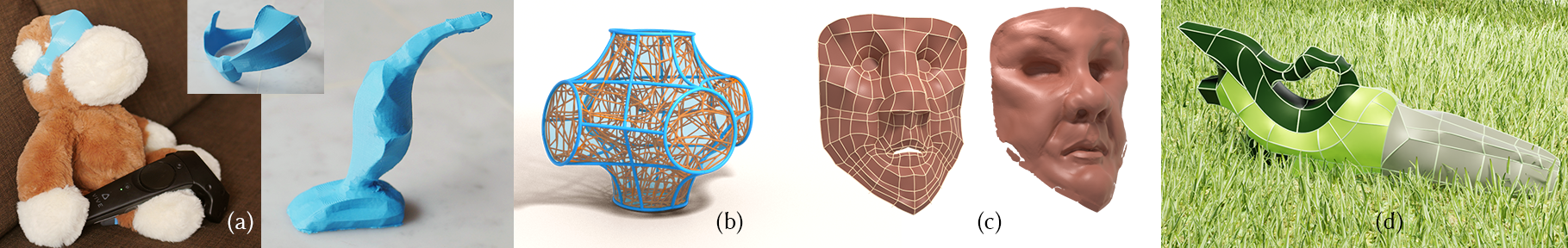

(a) 3D prints, (b) structural analysis and truss generation, (c) fine sculpting in ZBrush, (d) presentation rendering.

Automatic neatening and surfacing features come with a trade-off: users lose some control over their strokes, in exchange for a neater, fleshed-out result. We investigate in a user study whether this holds up against unconstrained 3D sketching, and find that users rate our system on par with freehand sketching on the Creativity Support Index (CSI), a scale that evaluates creativity support.

We think that understanding 3D sketches and using 3D sketching as a modelling interface are very exciting avenues of research. We hope to support future efforts by releasing the code for our Unity prototype application, as well as all data from the sketches done during the user study and those showcased in the paper.

You can explore the sketches in 3D here.

CHI presentation

Supplemental videos

Acknowledgments

We thank our study participants for their time and effort, and the anonymous reviewers for their helpful comments. This work was supported by ERC Starting Grant D3 (ERC-2016-STG 714221), NSERC Discovery Grant 480538, and by software and research donations from Adobe.